Microsoft Copilot is powerful. But as more organizations embrace AI, it's crucial to ensure that Copilot doesn’t access files it shouldn’t. If you’ve already set up sensitivity labels in your organization using Microsoft Purview, you’re likely familiar with how they help manage access and sharing. But did you know you can also control how Copilot interacts with this information?

With sensitivity labels, you classify and protect data based on its sensitivity. You can configure labels with varying protection levels and enforce them using tools like Data Loss Prevention (DLP). And now, with the EXTRACT permission, you can control Copilot’s access to these files—without removing access for users or setting up a separate environment.

The result? Your users continue working with files as usual, while Copilot is blocked from reading or interpreting the contents.

In this blog post, I’ll walk you through how to set this up in your organization and how Copilot behaves once it is in place.

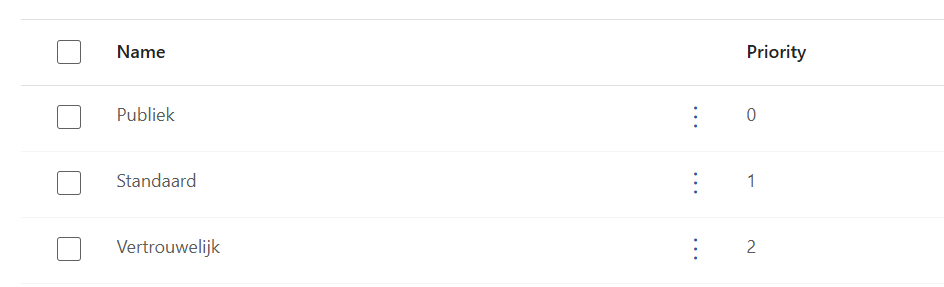

Let's go to Purview and open Information Protection. If you've already set up sensitivity labels, this screen should look familiar.

Start by reviewing the sensitivity labels already in use across your organization.

In my case, I have three labels already set up. For this example, I’ll use the label Vertrouwelijk (Confidential).

I want to ensure Copilot cannot process files labeled as confidential. Depending on your organization’s needs, you may choose to apply this restriction to an existing label or create a new one specifically designed to exclude Copilot access.

When creating or editing a label, focus on the Access control section. This is where you configure who can access the file and what they can do with it.

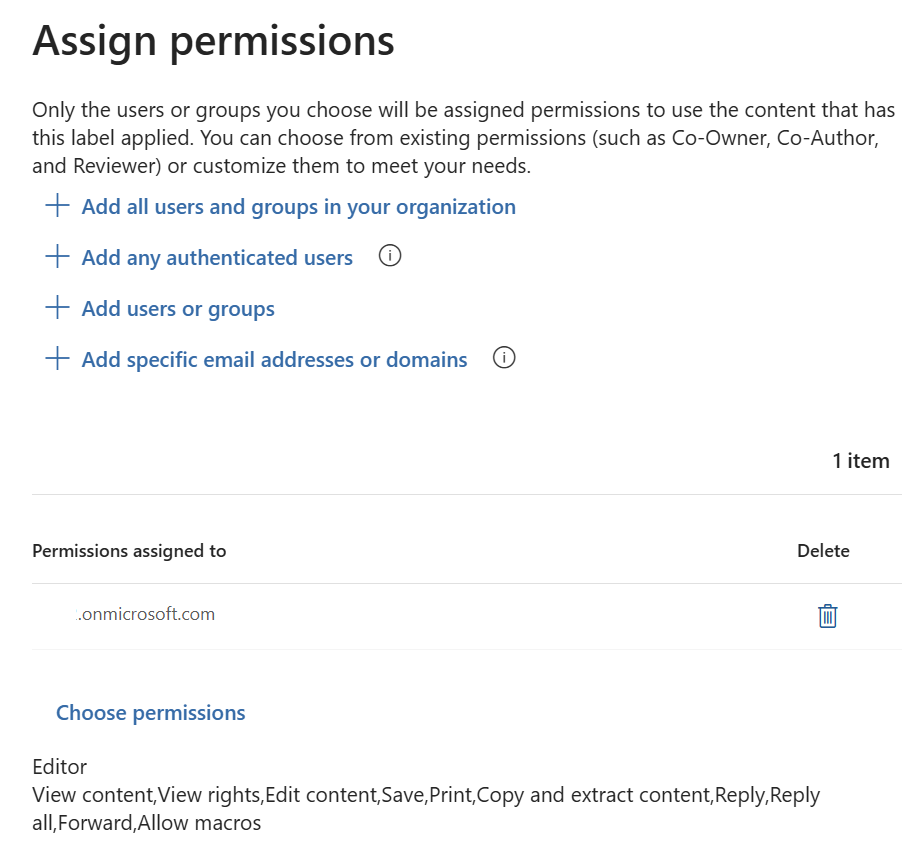

Open the Assign Permission tab in the label settings to see the available options.

In my case, I’ve granted all users in the tenant editor access to files with this label. If you select “Choose permissions,” you can define exactly which roles and rights should apply.

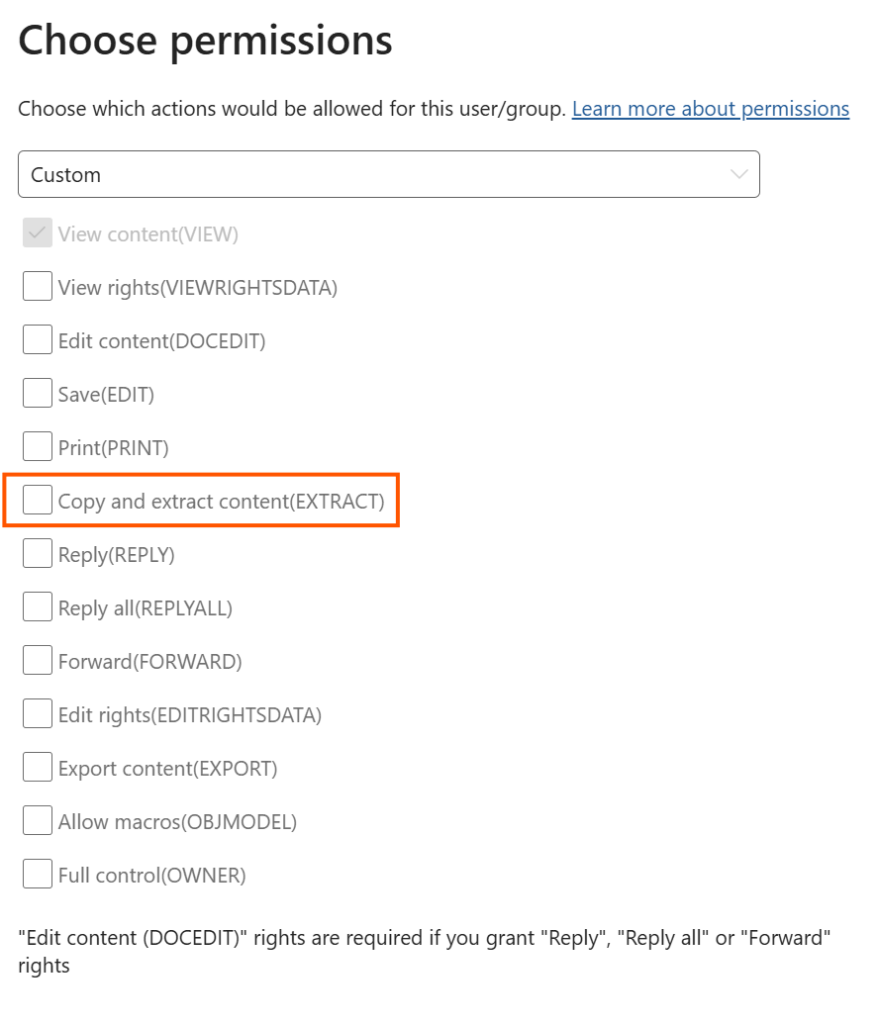

Let’s zoom in on customizing these roles.

You have two options:

As shown below, this is where you’ll want to focus on the EXTRACT permission.

EXTRACT determines whether Copilot can access the content. If it’s disabled, Copilot can’t read or interpret the file, but users can still open it and work with it (provided the label grants them the other rights they need).
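To make the logic concrete, here is a minimal Python sketch of how I think about it. It is a simplified mental model, not Purview's actual implementation or API: the `LabelPermissions` class, the two helper functions, and the label names are all hypothetical. It assumes that Copilot works on behalf of the user and needs both the VIEW and EXTRACT usage rights to return content, while opening a file only needs VIEW.

```python
from dataclasses import dataclass

# Usage-right names as they are encoded in a label's permissions.
# The checks below are a simplified illustration, not Purview's real logic.
VIEW = "VIEW"        # open and read the file
DOCEDIT = "DOCEDIT"  # edit the content
EXTRACT = "EXTRACT"  # copy and extract content


@dataclass(frozen=True)
class LabelPermissions:
    """Hypothetical stand-in for the rights a label grants to a user."""
    name: str
    rights: frozenset


def user_can_open(label: LabelPermissions) -> bool:
    # Opening a protected file only requires the VIEW right.
    return VIEW in label.rights


def copilot_can_read(label: LabelPermissions) -> bool:
    # Assumption: Copilot needs VIEW and EXTRACT for the user it acts for.
    return {VIEW, EXTRACT} <= label.rights


# A label that lets users view and edit, but with EXTRACT disabled.
confidential = LabelPermissions("Vertrouwelijk", frozenset({VIEW, DOCEDIT}))

# A label that also grants EXTRACT, so Copilot can work with the content.
general = LabelPermissions("General", frozenset({VIEW, DOCEDIT, EXTRACT}))

for label in (confidential, general):
    print(label.name, user_can_open(label), copilot_can_read(label))
```

The point of the sketch is that the two checks are independent: removing EXTRACT changes the answer for Copilot without changing whether the user can open the file.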

You can test this by applying the label to a document and asking Copilot to summarize it. If set up correctly, Copilot will respond that it cannot access the file content.

Once the label is applied and EXTRACT is disabled:

Document labeling in your organization is no longer just about compliance; it’s now part of your AI governance strategy. As tools like Microsoft Copilot become integrated into daily workflows, protecting sensitive data becomes even more critical.

With the EXTRACT permission in Microsoft Purview, you now have precise control. You can block Copilot from reading content in files related to HR, Finance, Legal, or Management while still allowing employees to work with those files.

Hi, I'm Ziggy Itjoejaree. I work as a Modern Workplace Engineer and have a big interest in Microsoft Purview, data, AI, and compliance. In my daily job, I mostly help customers transform and migrate to a cloud work environment.