With the rise of all the AI tools online, from Copilot to ChatGPT, it's becoming harder to track where sensitive data ends up. While blocking access might sound like the safest route, most organizations know that’s not workable for the end user, especially in a production environment. That's where DSPM for AI comes in: it gives you insight into how your data is currently used in your organization.

I've introduced DSPM for AI in Purview before in an earlier blog post. If you haven't read it yet or want to know how to set it up, you can read it here.

In this post, we’ll explore how Data Risk Assessments can help you spot potential oversharing before it becomes a real problem. We're not talking about enforcing DLP policies or blocking Copilot, but about seeing what's really happening with your data.

I’ve heard it many times before: organizations are confident they know how SharePoint is used and where their data lives. But do you really know how users are interacting with that data, especially the sensitive data?

If you've already started with information protection, like labeling, you probably have some visibility into what sensitive info exists in your environment. But with Data Risk Assessments, you get a much clearer picture of where sensitive data might be exposed, including how it is used across SharePoint and Teams.

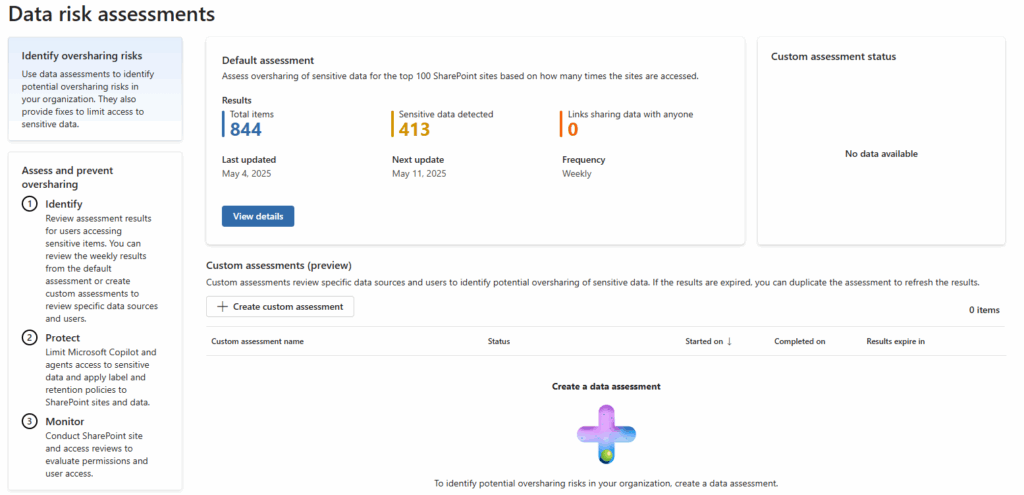

See the Data Risk Assessments dashboard below:

As you can see, in my developer tenant I have a few hundred items, and nearly half of them contain sensitive data. Since this is my developer environment and external sharing is blocked by default, no files are shared via anonymous links. Phew (though I doubt anyone needs my demo data anyway 😉 ).

You don’t need custom configurations to get started. Even without your own sensitivity labels, Purview uses built-in detection to surface insights. That means you can start identifying risk areas right away, without any active policies.

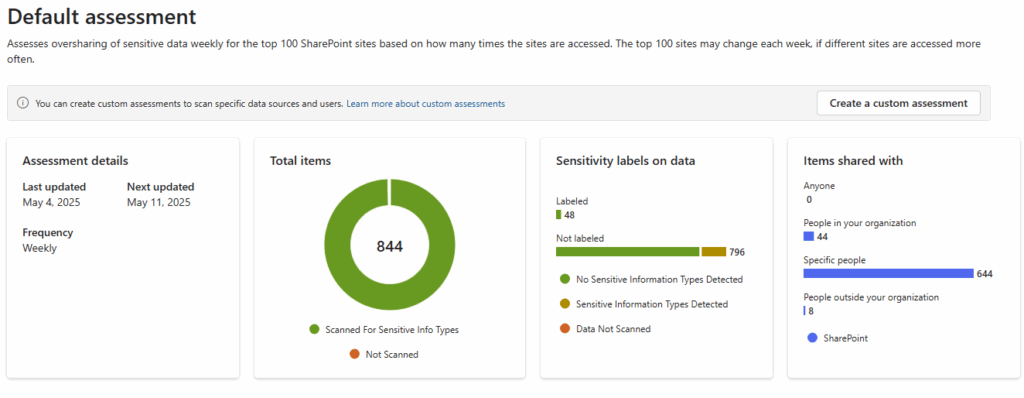

Now, let’s take a closer look at what’s shown in the Default assessment.

This one runs weekly and automatically scans the top 100 most accessed SharePoint sites in your environment. The list changes based on access frequency, so you always see the most relevant activity.

Here’s what the default view reveals:

It gives you an overview of how many files are scanned, labeled, or contain sensitive data. With this info, you can quickly spot unlabeled items that may need extra classification and review how files are shared internally or externally.

Purview DSPM for AI breaks down oversharing insights per site. For each site, you can see how many sensitive items were found, how often items were accessed, and how the sharing settings are configured.

In the example shown, all three SharePoint sites had sensitive data shared with specific people, some with dozens of exposed items. This makes it easy to prioritize where access reviews or follow-up actions are needed.
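To make that prioritization concrete, here is a minimal Python sketch of how you could rank sites for review once you have noted the per-site numbers from the assessment. Everything in it is an assumption for illustration: the `SiteFinding` fields, the ranking rule (external sharing first, then sensitive item count, then access frequency), and the sample values are hypothetical and do not reflect a Purview export format or API.

```python
from dataclasses import dataclass


@dataclass
class SiteFinding:
    """One per-site row, noted by hand from the assessment view.

    Field names are hypothetical; this is not a Purview export schema.
    """
    site: str
    sensitive_items: int      # items in which sensitive info was detected
    times_accessed: int       # how often items on the site were accessed
    shared_externally: bool   # True if sensitive items are shared outside the org


def review_order(findings: list[SiteFinding]) -> list[SiteFinding]:
    """Sort sites so the most urgent access reviews come first.

    Assumed rule of thumb: external sharing outranks everything, then the
    number of sensitive items, then access frequency.
    """
    return sorted(
        findings,
        key=lambda f: (f.shared_externally, f.sensitive_items, f.times_accessed),
        reverse=True,
    )


# Made-up example values, not taken from a real tenant.
findings = [
    SiteFinding("Finance", sensitive_items=42, times_accessed=310, shared_externally=False),
    SiteFinding("HR", sensitive_items=12, times_accessed=95, shared_externally=True),
    SiteFinding("Projects", sensitive_items=42, times_accessed=120, shared_externally=False),
]

for finding in review_order(findings):
    print(finding.site, finding.sensitive_items, finding.times_accessed)
```

The ranking rule is the part to adapt: if your organization cares more about access frequency than about the raw number of sensitive items, swap the order of the fields in the sort key.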

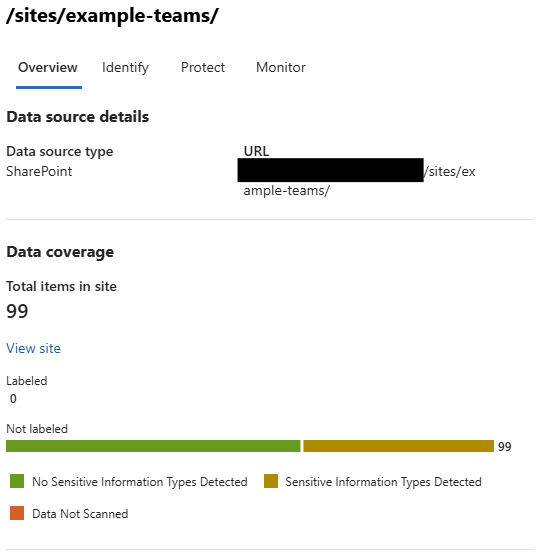

When opening a specific site in Data Risk Assessments, the Overview tab gives you a quick snapshot of data exposure: how many items are labeled or not labeled, and whether sensitive info types (SITs) were detected using Purview’s built-in scanning.

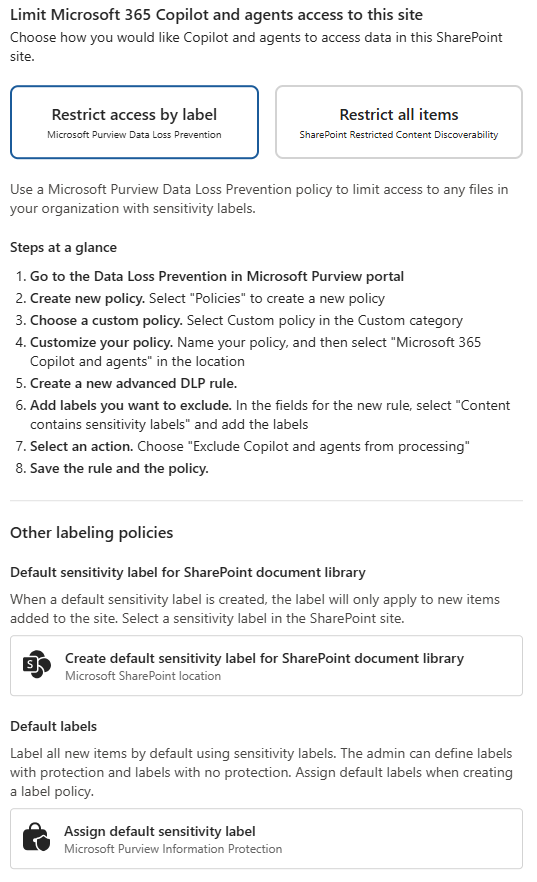

The Protect tab shows what you can do based on the risk findings. Specifically, it lets you control how Microsoft 365 Copilot and other agents access data in that site.

You get two options:

- Restrict by label
- Full restriction

Each option walks you through the steps. For example, if you choose to restrict by label, you’ll be guided through setting up a new DLP policy that keeps Copilot from processing content with the labels you select. If you go for full restriction, PowerShell steps are provided to block access altogether.

This tab is where visibility in DSPM for AI turns into action, so you can protect data before Copilot or any other agent interacts with it.

From my perspective, Data Risk Assessments in DSPM for AI are a great way to start understanding your data exposure without having to enforce anything upfront. They give you just the right amount of visibility to see where sensitive data lives, how it's shared, and where things might go wrong. For me, it’s not about locking everything down from the start, but about thinking through how to limit what Copilot or other AI agents can reach without getting in the way of everyday work. You can't protect what you can't see, and this helps you see clearly before taking the next step. What do you think about these reports?

Hi, I'm Ziggy Itjoejaree. I work as a Modern Workplace Engineer and have a big interest in Microsoft Purview, data, AI, and compliance. In my daily job, I mostly help customers transform and migrate to a cloud work environment.